It’s Over 9000

The Backdrop

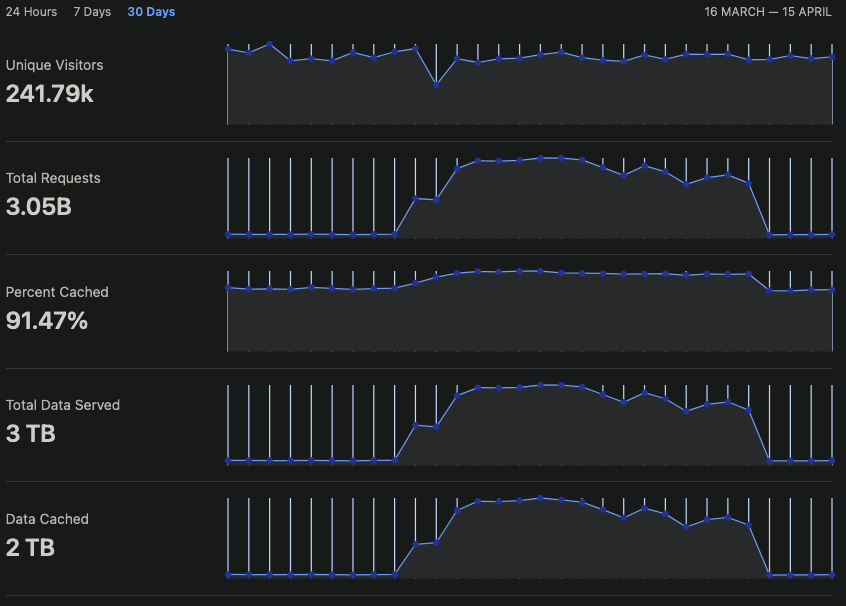

In early 2024 a user decided that they *really* liked my Blackbox API. They and many others decided to blow my little cluster out of the water and ship an astonishing 3 billion requests in a single month. All of this was handled in a timely manner with only 6 old E3 servers and some caching magic. Funnily enough, this wasn’t even the first time my cluster had handled a rush like this.

The Origins

Blackbox Proxy Block was originally a project of Mind-Media.com. BBPB, for short, was used by me and many others to determine if someone was using a proxy or VPN. I used it extensively in the days of running a Team Fortress 2 server group to detect and block people trying to avoid IP-based bans. Around August 2019, Mind-Media and the original creator decided BBPB would be discontinued and shelved permanently.

The Spiritual Successor

With some of my knowledge of ASNs, IP addresses, and general programming, I figured I could take on and create a successor to this project. With that, the idea of Blackbox was born. Initially, I decided to take the same steps the original author had and simply use PHP with a MySQL backend. Taking the data that was left over and meshing it with some of my know-how with ASNs, I created my own flavor of the project in a few days of work. Making sure to follow his schema, I made a drop-in replacement for his service. The Blackbox API was created, and I told the original owner I’d gladly become its spiritual successor. He gladly accepted and soon after began forwarding requests to my service.

Cloudflare

I’ve long been a user of Cloudflare. I initially used it to cache some map assets I had for Team Fortress 2 servers. It seemed like a great fit for Blackbox as well. With the requests being predictable, stable, and uniform, I decided to try out a 30-day cache. With the cache in place, I saw a significant drop in requests, as many users simply requested the same information over and over. This excess, already processed load simply vanished into the cached content hosted by Cloudflare. Frankly, it wasn’t an insignificant amount of traffic either, as nearly 50% of requests at the time were handled by Cloudflare. That said, I expect Mind-Media had done something similar in hopes of abating the load they were experiencing.
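To make the 30-day cache idea concrete, here is a minimal sketch of how an origin could mark a response as cacheable for 30 days. The `cacheHeaders` helper is hypothetical; the post does not say how the cache was actually configured, and whether a CDN honors these headers for API responses depends on its own settings.

```javascript
// Hypothetical helper, not the actual Blackbox configuration.
const SECONDS_PER_DAY = 24 * 60 * 60;

// Build response headers that allow shared caches (such as a CDN)
// to keep a response for the given number of days.
function cacheHeaders(days) {
  const maxAge = days * SECONDS_PER_DAY;
  return {
    // "public" permits shared caches to store the response;
    // "s-maxage" applies to shared caches specifically.
    'Cache-Control': `public, max-age=${maxAge}, s-maxage=${maxAge}`,
  };
}

// 30 days works out to 2,592,000 seconds.
console.log(cacheHeaders(30)['Cache-Control']);
```

The long lifetime only makes sense because the answers are predictable and uniform: the same lookup returns the same result, so a repeated request never needs to reach the origin.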

NodeJS + PostgreSQL

Mind-Media mentioned that they needed to remove the strain on their servers when they initially discontinued the project. It didn’t take me long to realize why, and frankly, it was a glaring problem. The API requests, albeit simple, were very taxing on MySQL. At the time, MySQL needed to search through the entire database for each request, which made it excessively slow. PHP didn’t help much, if at all, because it is single-threaded and synchronous. As I added initial functionality, I saw my request times balloon into the thousands of milliseconds.

Something needed to be done about this, and the first step was removing PHP. I had dabbled in NodeJS and JavaScript before this in my day-to-day job. It took me a bit of trial and error, but I quickly learned the power of an async code base. Soon I dropped request times by hundreds of milliseconds, but that was a mere few tens of percent shaved off.

It didn’t take me too long, albeit with a bit of pushing by others, to investigate the slow request times and realize the biggest issue was MySQL itself. With that in mind, I began to look around for a better database for the project and landed on PostgreSQL, which features network address types. This soon became the secret sauce to our success as I trialed the change. It didn’t take long to see how much faster PostgreSQL was going to be for the project, and I quickly swapped it in place of MySQL.
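For readers unfamiliar with PostgreSQL's network address types, the sketch below shows one way they can be used for an IP lookup. The table, column, and index names are hypothetical, since the post does not show the real schema. The `cidr` and `inet` types, the `>>=` ("contains or equals") operator, and GiST indexing with `inet_ops` are standard PostgreSQL features. The function returns a `{ text, values }` object, the shape that the node-postgres client accepts as a parameterized query; using that client is also an assumption.

```javascript
const net = require('node:net');

// Hypothetical schema, for illustration only:
//   CREATE TABLE ranges (network cidr NOT NULL, is_proxy boolean NOT NULL);
//   CREATE INDEX ranges_network_idx ON ranges USING gist (network inet_ops);
// The GiST index lets PostgreSQL answer containment queries
// without comparing the address against every row.

// Build a parameterized query that finds a range containing the given IP.
function buildLookupQuery(ip) {
  // net.isIP returns 0 for anything that is not a valid IPv4/IPv6 address.
  if (net.isIP(ip) === 0) {
    throw new TypeError(`not an IP address: ${ip}`);
  }
  return {
    // ">>=" is true when the network on the left contains or equals the address.
    text: 'SELECT is_proxy FROM ranges WHERE network >>= $1::inet LIMIT 1',
    values: [ip],
  };
}

console.log(buildLookupQuery('203.0.113.7'));
```

Because the database understands addresses and networks natively, the containment check is a single indexed comparison rather than string or integer arithmetic written by hand.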

At this point, I was serving hundreds of requests per minute in hundreds of milliseconds or less, instead of thousands.

Docker to K3s

The next hurdle came initially as a complaint about downtime. Every day I needed to update my PostgreSQL database with the newest data, but in doing so I caused a minor outage. This was mainly because the service was single-homed on a Docker host. It was convenient, KISS (keep it simple, stupid) approved, and functional. However, with increasing loads, it became clear that it wouldn’t scale well. Large surges of hundreds of requests per second would typically bog down the server for a few moments before it regained its footing. I knew that with Kubernetes I could get something better and more scalable.

I eventually settled on K3s as my choice, and the initial Dragon cluster was born. The Dragon cluster didn’t take long to impress: with its sharded capacity at my fingertips, I dropped request times to the tens of milliseconds. Dragon itself didn’t last, as I eventually rebuilt it through many different revisions, but its premise stayed the same. Dragon turned into Phoenix, Phoenix turned back into Dragon, and finally, Dragon turned into Pepper.

Returning to the Present

As I sit here looking at my quite pretty and yet explosive Cloudflare graphs, I come to appreciate a few things specifically: my trials, my general knowledge, my willingness to try new things, and my persistence. Without them, I doubt I could have handled a surge like this one.

This surge, though not the first, was heavily handled by the caches of Cloudflare. Without them, I likely would have needed ten times the amount of infrastructure. The remaining uncached requests were easily handled by 6 old, and mostly cheap, E3 dedicated servers. K3s was the glue that allowed me to use all their compute and share the load over multiple servers. Finally, each incremental improvement I made to the service, even if it initially seemed small, such as going to NodeJS over PHP, made a massive impact in handling the request load.